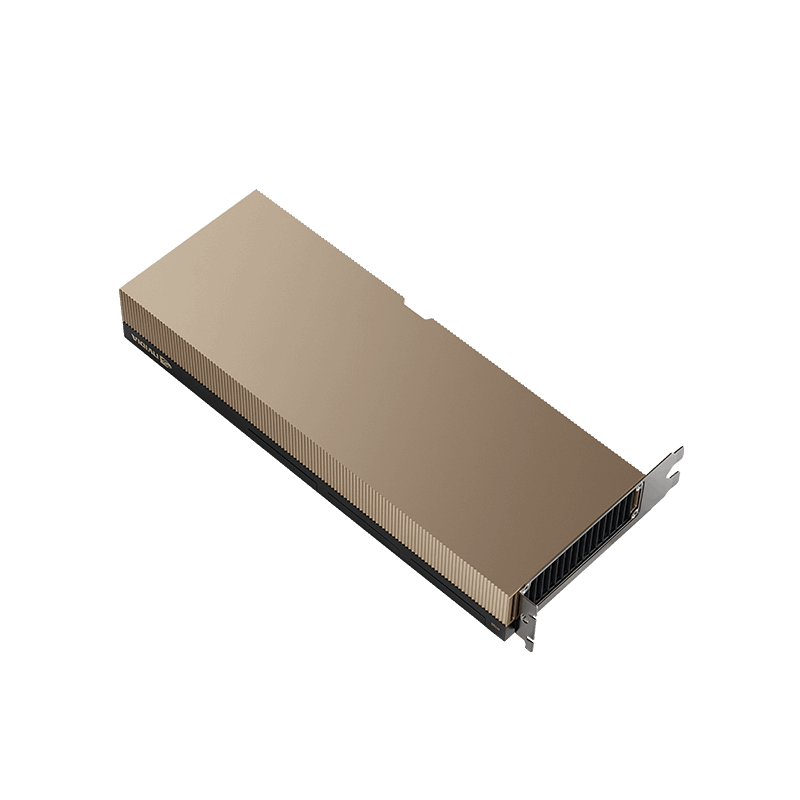

Unified Converged Accelerators for Compute and Networking

NVIDIA converged accelerators combine the processing power of an NVIDIA GPU with the networking and security features of NVIDIA SmartNICs and Data Processing Units (DPUs) on a single card. This design targets high performance and strong security for I/O-intensive, GPU-accelerated workloads, from the data center to the edge.

The NVIDIA A30X combines the A30 Tensor Core GPU with the BlueField-2 DPU, balancing compute and I/O performance for use cases such as 5G virtual RAN (vRAN) and AI-based cybersecurity. Multiple services can run concurrently on the GPU, while the onboard PCIe switch provides low latency and predictable performance.

High-Performance 5G Workloads

The A30X is a high-throughput platform for 5G deployments. Because data moves between the GPU and the network through the card's onboard PCIe switch instead of the host PCIe system, processing latency drops and data throughput rises, which lets each server support more subscribers. Paired with the NVIDIA Aerial SDK, a software-defined framework for building cloud-native, GPU-accelerated 5G networks, the A30X accelerates signal and data processing for vRAN infrastructure. Aerial's fully software-defined PHY layer supports Layer 2+ functions and is designed to be fully programmable.

The A30X and Aerial SDK run on commercial off-the-shelf (COTS) hardware, which simplifies deployment on Kubernetes-based platforms such as NVIDIA EGX, where container orchestration streamlines management. Aerial is available as a downloadable package or as an NGC container image, giving developers a foundation for building GPU-accelerated 5G solutions.

AI-Powered Cybersecurity

The A30X also opens up new AI-enabled cybersecurity and networking use cases. Using the programmable Arm cores in the BlueField-2 DPU together with the NVIDIA Morpheus application framework, developers can deploy GPU-accelerated network functions such as threat detection, data leak prevention, and anomaly profiling. GPU processing can be applied directly to network traffic, and the data path between the GPU and DPU stays isolated, which improves security. Morpheus gives cybersecurity teams an AI-driven platform to filter, analyze, and classify large streams of real-time data, enabling faster threat detection and response across data centers, clouds, and edge locations.
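The filter-and-classify idea behind this kind of pipeline can be sketched in plain Python. This is not the Morpheus API and runs entirely on the CPU; the `FlowRecord` fields, the stage functions, and the 10x-over-baseline rule are hypothetical choices made only to show how records stream through successive stages.

```python
"""Toy, CPU-only filter -> classify pipeline (NOT the Morpheus API).

Record fields, stage names, and the threshold rule are hypothetical."""
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class FlowRecord:
    # Hypothetical per-flow summary of network traffic.
    src: str
    dst: str
    bytes_out: int
    internal_dst: bool


def filter_stage(records: Iterable[FlowRecord]) -> Iterator[FlowRecord]:
    """Keep only flows whose destination is outside the network."""
    for r in records:
        if not r.internal_dst:
            yield r


def classify_stage(records: Iterable[FlowRecord], baseline_bytes: int,
                   ratio: float = 10.0) -> Iterator[tuple[FlowRecord, str]]:
    """Label a flow 'suspicious' if it sends more than ratio x the baseline."""
    for r in records:
        label = "suspicious" if r.bytes_out > ratio * baseline_bytes else "normal"
        yield r, label


def run_pipeline(records: Iterable[FlowRecord],
                 baseline_bytes: int) -> list[tuple[FlowRecord, str]]:
    # Stages are chained generators, so records stream through one at a time.
    return list(classify_stage(filter_stage(records), baseline_bytes))


if __name__ == "__main__":
    flows = [
        FlowRecord("10.0.0.5", "10.0.0.9", 5_000, True),
        FlowRecord("10.0.0.5", "203.0.113.7", 4_000, False),
        FlowRecord("10.0.0.8", "198.51.100.2", 900_000, False),
    ]
    for flow, label in run_pipeline(flows, baseline_bytes=20_000):
        print(flow.dst, label)
```

Run as a script, it drops the internal flow, labels the 4,000-byte external flow `normal`, and labels the 900,000-byte flow `suspicious`. A real deployment would replace the fixed threshold with a trained model.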

Key Features of NVIDIA A30X

- Unmatched GPU Performance

The A30X is built around an NVIDIA Ampere architecture GPU designed for modern AI workloads, scientific computing, data analytics, and other demanding applications. A key feature is Multi-Instance GPU (MIG) technology, which partitions the GPU into multiple, fully isolated GPU instances.

Each instance has its own dedicated compute, memory, and cache resources, so a single A30X card can serve multiple users or applications at once without one workload interfering with another. For example, the GPU can be divided into four independent instances of 6 GB each, two of 12 GB each, or used as a single 24 GB instance. This isolation raises hardware utilization and matters in both bare-metal deployments (dedicated hardware) and virtualized or cloud environments, where resources must be shared securely and efficiently.
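To make the partitioning concrete, here is a minimal Python sketch of how MIG instances might be packed onto the GPU. It uses a simplified model that is an assumption, not something this page states: the GPU is treated as four 6 GB slices, the profile names `1g.6gb`, `2g.12gb`, and `4g.24gb` stand for the 6, 12, and 24 GB instance sizes, and an instance occupying n slices must start at a multiple of n. The driver, not this code, enforces the real placement rules, and media-engine variants of the profiles are ignored.

```python
"""Simplified model of MIG partitioning on a 24 GB A30-class GPU.

Assumption: 4 slices of 6 GB each; an instance using n slices must
start at a slice index that is a multiple of n."""

# Profile name -> number of 6 GB slices it occupies.
PROFILE_SLICES = {"1g.6gb": 1, "2g.12gb": 2, "4g.24gb": 4}
TOTAL_SLICES = 4


def fits(profiles: list[str]) -> bool:
    """Return True if all requested instances can be placed together."""
    # Place the largest instances first; backtrack if a placement fails.
    sizes = sorted((PROFILE_SLICES[p] for p in profiles), reverse=True)
    used = [False] * TOTAL_SLICES

    def place(i: int) -> bool:
        if i == len(sizes):
            return True
        n = sizes[i]
        for start in range(0, TOTAL_SLICES, n):  # aligned start positions only
            span = range(start, start + n)
            if not any(used[s] for s in span):
                for s in span:
                    used[s] = True
                if place(i + 1):
                    return True
                for s in span:  # undo and try the next aligned position
                    used[s] = False
        return False

    return place(0)


if __name__ == "__main__":
    print(fits(["1g.6gb"] * 4))                         # four 6 GB instances
    print(fits(["2g.12gb", "1g.6gb", "1g.6gb"]))        # mixed layout
    print(fits(["4g.24gb", "1g.6gb"]))                  # exceeds 24 GB
```

The alignment rule is what makes some layouts invalid even when the total memory would fit; the backtracking search simply tries every aligned position.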

- Enhanced Networking and Security

Networking is as critical as raw compute in today's data-intensive workloads. The A30X integrates a BlueField-2 DPU (Data Processing Unit) that includes NVIDIA ConnectX SmartNIC technology. The DPU offloads networking, storage, and security tasks from the host CPU and GPU, accelerating data transfers while handling sensitive operations such as encryption and firewall processing efficiently and securely.

The DPU is programmable and can run custom network functions, reducing overhead on the host system. This hardware-level offload and isolation improve performance and strengthen defenses against cyber threats, which suits environments where data integrity and speed are paramount.

- Superior Data Efficiency

A key element of the A30X design is its integrated PCIe switch, implemented by the BlueField-2 DPU. In a conventional setup, data traveling between the GPU and networking components must pass through the server's main PCIe bus, which can become a bottleneck.

The A30X's internal PCIe switch routes data directly between the GPU and the network interface on the DPU without involving the host PCIe system. This lowers latency, increases available bandwidth, and improves overall efficiency. For I/O-heavy applications such as 5G signal processing or AI inference over large data flows, it can significantly improve throughput and responsiveness.
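A rough calculation shows why keeping GPU-to-network traffic on the card can matter. This Python sketch assumes the host connection runs at the PCIe Gen4 x8 electrical width listed in the specification table and that both 100 Gbps ports run at full line rate. It uses the standard Gen4 signaling rate of 16 GT/s per lane with 128b/130b encoding and ignores packet overhead, so the figures are upper bounds, not measurements.

```python
"""Back-of-envelope comparison: host PCIe link vs. network line rate.

Assumptions: PCIe Gen4 host link at x8 electrical width and two 100 Gbps
ports (both from the spec table). PCIe TLP/DLLP overhead is ignored."""

GEN4_GT_PER_LANE = 16.0   # PCIe Gen4 signaling rate, GT/s per lane
ENCODING = 128 / 130      # 128b/130b line-encoding efficiency


def pcie_gbps(lanes: int) -> float:
    """Bit rate per direction after line encoding, in Gb/s."""
    return GEN4_GT_PER_LANE * ENCODING * lanes


host_link = pcie_gbps(8)  # host connection, x8 electrical
network = 2 * 100.0       # two 100 Gbps ports at line rate

print(f"Host PCIe Gen4 x8: {host_link:.1f} Gb/s per direction")
print(f"Network line rate: {network:.1f} Gb/s")
print(f"Network / host link: {network / host_link:.2f}x")
```

Under these assumptions the two ports can carry roughly 1.6 times what the x8 host link moves in one direction, so forcing that traffic through the host would cap throughput, whereas the onboard switch keeps it off the host link.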

- Enterprise-Grade Utilization

Combining MIG with container orchestration tools such as Kubernetes lets IT teams allocate GPU resources dynamically across multiple users or applications. Instead of a GPU sitting idle or underused, it can be partitioned and shared, which maximizes utilization.

Because each MIG instance has dedicated resources, MIG provides guaranteed quality of service (QoS), so each workload gets the performance it needs. This capability is vital in multi-tenant data centers, cloud platforms, and enterprises where varied AI and HPC tasks run concurrently. Support for hypervisor-based virtualization further integrates the A30X into modern cloud-native and hybrid IT infrastructure, simplifying management and scaling.
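As an illustration of MIG with Kubernetes, the Python sketch below builds a Pod manifest that requests one 6 GB MIG instance. It assumes the NVIDIA Kubernetes device plugin is configured with its "mixed" MIG strategy, which exposes each MIG profile as a resource named `nvidia.com/mig-<profile>`; the helper `mig_pod`, the pod name, and the container image are hypothetical placeholders.

```python
"""Build a Kubernetes Pod manifest requesting a single MIG instance.

Assumes the NVIDIA device plugin's "mixed" MIG strategy, which names
resources nvidia.com/mig-<profile>. Pod name and image are hypothetical."""
import json


def mig_pod(name: str, image: str, profile: str = "1g.6gb") -> dict:
    """Return a Pod manifest (as a dict) that requests one MIG instance."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                # Extended resources such as GPUs are requested via limits.
                "resources": {"limits": {f"nvidia.com/mig-{profile}": 1}},
            }],
        },
    }


if __name__ == "__main__":
    manifest = mig_pod("inference-a", "example.com/my-inference:latest")
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and passed to `kubectl apply -f`, which accepts JSON as well as YAML. Requesting `2g.12gb` instead would give the container a larger slice of the same card.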

Advantages of NVIDIA Converged Accelerators

- Robust Enterprise Security and Performance

By combining an NVIDIA Ampere architecture GPU with BlueField-2 DPU capabilities on a single card, the A30X pairs high processing performance with hardware-level security. Its dedicated PCIe Gen4 switch creates a high-speed, predictable data path between the GPU, DPU, and network, minimizing latency and avoiding bottlenecks, which is crucial for applications such as 5G signal processing.

- Advanced Security Isolation

Data generated at the edge can be encrypted and transmitted directly across the network without traversing the server PCIe bus, reducing exposure to network threats and protecting host systems.

- Intelligent Networking

The embedded BlueField-2 DPU supports NVIDIA ConnectX-6 Dx features, enabling direct GPU processing on network traffic. This capability powers innovative AI-based networking applications, including data leak prevention and network performance optimization.

- Cost-Effective Scalability

Combining GPU, DPU, and PCIe switch on one card allows organizations to use standard servers to handle workloads previously requiring specialized hardware, delivering edge and data center performance improvements cost-effectively.

Multi-GPU Connectivity

- Third-Generation NVLink

Connecting two A30X cards with NVLink provides up to 200 GB/s of bidirectional GPU-to-GPU bandwidth, letting multi-GPU applications scale performance and work across the combined memory of both cards (2x 24 GB). NVLink bridges are available for motherboards with standard or wide PCIe slot spacing.
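To put the NVLink figure in perspective, this Python sketch estimates the ideal time to move a 6 GB buffer between two linked cards. It assumes the 200 GB/s bidirectional bandwidth splits evenly into 100 GB/s per direction and, for comparison, uses the roughly 15.75 GB/s per direction of a PCIe Gen4 x8 link after 128b/130b encoding. Both are peak rates, so real transfers will take longer.

```python
"""Ideal transfer-time estimate between two NVLink-connected cards.

Assumptions: 200 GB/s bidirectional NVLink = 100 GB/s per direction;
PCIe comparison uses a Gen4 x8 link after 128b/130b encoding."""

NVLINK_GB_PER_S = 200 / 2  # GB/s in one direction
# 16 GT/s per lane x encoding efficiency x 8 lanes / 8 bits per byte
PCIE_GEN4_X8_GB_PER_S = 16 * (128 / 130) * 8 / 8


def transfer_ms(size_gb: float, rate_gb_per_s: float) -> float:
    """Ideal time in milliseconds to move size_gb at rate_gb_per_s."""
    return size_gb / rate_gb_per_s * 1000


buffer_gb = 6.0  # e.g. the memory of one 6 GB MIG instance
print(f"NVLink:       {transfer_ms(buffer_gb, NVLINK_GB_PER_S):.1f} ms")
print(f"PCIe Gen4 x8: {transfer_ms(buffer_gb, PCIE_GEN4_X8_GB_PER_S):.1f} ms")
```

Under these assumptions NVLink moves the buffer more than six times faster than the x8 PCIe path, which is why multi-GPU workloads that exchange data frequently benefit from the bridge.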

Comprehensive Software Ecosystem

- Virtual GPU and Compute Virtualization

Support for NVIDIA AI Enterprise on VMware and NVIDIA Virtual Compute Server accelerates virtualized HPC, AI, data science, and analytics workloads efficiently.

- AI Frameworks and Libraries

Compatible with popular deep learning frameworks like TensorFlow, MXNet, and Caffe2, and optimized GPU libraries such as cuDNN, cuBLAS, and TensorRT for enhanced training and inference performance.

- NVIDIA CUDA Platform

Provides support for mainstream programming languages and APIs including C/C++, Fortran, OpenCL, and DirectCompute, enabling accelerated computation across various domains like ray tracing and video processing.

NVIDIA A30X Specifications

| Product | NVIDIA A30X Converged Accelerator |
|---|---|
| Architecture | NVIDIA Ampere |
| Process Size | 7 nm (TSMC) |
| Transistors | 54.2 billion |
| Die Size | 826 mm² |
| Peak FP64 | 5.2 TFLOPS |
| Peak FP64 Tensor Core | 10.3 TFLOPS |
| Peak FP32 | 10.3 TFLOPS |
| TF32 Tensor Core | 82.6 TFLOPS (dense; up to 2x with structured sparsity) |
| Peak FP16 Tensor Core | 165 TFLOPS (dense; up to 2x with structured sparsity) |
| Peak INT8 Tensor Core | 330 TOPS (dense; up to 2x with structured sparsity) |
| GPU Memory | 24 GB HBM2e |
| Memory Bandwidth | 1,223 GB/s |
| NVLink | Third generation, 200 GB/s bidirectional |
| Multi-Instance GPU Support | 4 instances at 6 GB each; 2 instances at 12 GB each; 1 instance at 24 GB |
| Media Engines | 1 Optical Flow Accelerator (OFA); 1 JPEG decoder (NVJPEG); 4 video decoders (NVDEC) |
| Interconnect | PCIe Gen4 (x16 physical, x8 electrical); NVLink bridge |
| Networking | 2x 100 Gbps ports, Ethernet or InfiniBand |
| Integrated DPU | NVIDIA BlueField-2: implements NVIDIA ConnectX-6 Dx functionality; 8 Arm Cortex-A72 cores at 2 GHz; implements a PCIe Gen4 switch |
| NVIDIA Enterprise Software | NVIDIA Virtual Compute Server (vCS); NVIDIA AI Enterprise |
| Form Factor | Dual-slot, full height, full length (FHFL) |
| Thermal Solution | Passive |
| Maximum Power Consumption | 230 W |

Accessories

- RTXA6000NVLINK-KIT: NVLink bridge kit for connecting A30X cards on motherboards with standard PCIe slot spacing (application support required).

Supported Operating Systems

- Windows Server 2012 R2, 2016, 2019

- Red Hat CoreOS 4.7

- Red Hat Enterprise Linux (7.7 to 8.3)

- SUSE Linux Enterprise Server 12 SP3+ and 15 SP2

- Ubuntu 14.04 LTS through 20.04 LTS