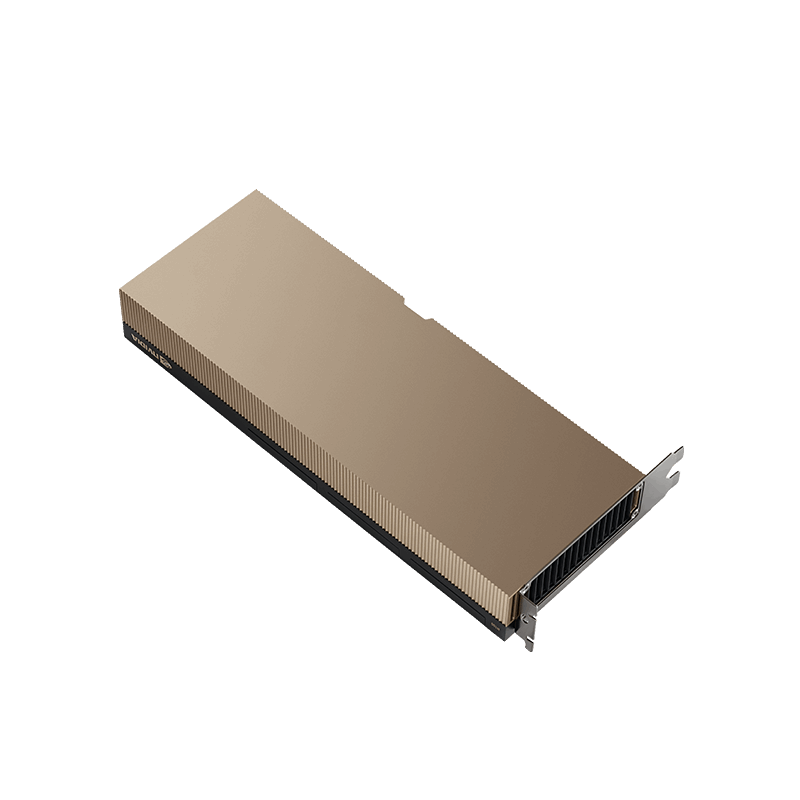

Unified Compute and Networking for Next-Generation Workloads

The NVIDIA A100X Converged Accelerator seamlessly integrates the computational power of the NVIDIA A100 Tensor Core GPU with the advanced networking and security capabilities of the NVIDIA BlueField-2 Data Processing Unit (DPU). This powerful combination is purpose-built for I/O-intensive, GPU-accelerated workloads such as massive MIMO 5G processing, AI-on-5G infrastructure, multi-node training, and real-time signal processing applications.

By unifying NVIDIA’s high-performance GPU architecture with the intelligence of SmartNIC and DPU technologies in a single, efficient architecture, the A100X enables exceptional throughput, security, and scalability from core data centers to edge computing environments.

Key Performance Specifications

| Metric | Value |
| --- | --- |
| Peak FP64 | 9.9 TFLOPS |
| Peak FP64 Tensor Core | 19.1 TFLOPS (with sparsity) |
| Peak FP32 | 19.9 TFLOPS |
| TF32 Tensor Core | 159 TFLOPS (with sparsity) |
| FP16 Tensor Core | 318.5 TFLOPS (with sparsity) |
| INT8 Tensor Core | 637 TOPS (with sparsity) |
| Multi-Instance GPU (MIG) | Supported, up to 7 isolated instances |
| GPU Memory | 80 GB HBM2e |
| Memory Bandwidth | 2,039 GB/s |
| Interconnect Options | PCIe Gen4 (x16 physical, x8 electrical) / NVLink Bridge |
| Networking | Dual 100 Gbps Ethernet or InfiniBand ports |
| Form Factor | Dual-slot, full-height, full-length |
| Thermal Design | Passive cooling solution |
| Max Power Consumption | 300 W |
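As a rough illustration of how the memory and compute figures above relate, the following sketch derives two back-of-the-envelope numbers from the table: the ratio of peak FP32 throughput to memory bandwidth, and the time needed to read the full 80 GB of GPU memory once at peak bandwidth. The function names are illustrative only, and the results are theoretical upper bounds computed from peak figures, not measured performance.

```python
# Values copied from the specification table above.
PEAK_FP32_TFLOPS = 19.9      # peak FP32 throughput, TFLOPS
MEM_BANDWIDTH_GBS = 2039.0   # memory bandwidth, GB/s
GPU_MEMORY_GB = 80.0         # GPU memory capacity, GB


def flops_per_byte(peak_tflops: float, bandwidth_gbs: float) -> float:
    """Peak FLOP available per byte moved from GPU memory (hypothetical helper)."""
    return (peak_tflops * 1e12) / (bandwidth_gbs * 1e9)


def full_memory_read_seconds(memory_gb: float, bandwidth_gbs: float) -> float:
    """Time to stream the whole GPU memory once at peak bandwidth (hypothetical helper)."""
    return memory_gb / bandwidth_gbs


if __name__ == "__main__":
    balance = flops_per_byte(PEAK_FP32_TFLOPS, MEM_BANDWIDTH_GBS)
    sweep = full_memory_read_seconds(GPU_MEMORY_GB, MEM_BANDWIDTH_GBS)
    print(f"FP32 FLOP per byte of memory traffic: {balance:.2f}")
    print(f"Time to read all GPU memory once: {sweep * 1000:.1f} ms")
```

Under these assumptions, a kernel needs roughly 9.8 FP32 operations per byte read to be limited by compute rather than memory bandwidth, and a single pass over all 80 GB takes about 39 ms at best.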

Breakthrough 5G Acceleration

NVIDIA A100X sets a new standard for 5G infrastructure by eliminating data path inefficiencies. With the BlueField-2 DPU managing I/O directly, data no longer needs to traverse the host system’s PCIe bus, which reduces latency and increases throughput. This architecture supports higher subscriber densities and more efficient network utilization.

The A100X is fully compatible with the NVIDIA Aerial SDK, a comprehensive framework for building cloud-native, software-defined 5G networks. Aerial accelerates signal and data processing tasks within virtualized RAN (vRAN) environments, providing a programmable PHY layer and the ability to integrate L2+ network functions. Together, A100X and Aerial deliver a high-performance, GPU-accelerated platform ready for commercial off-the-shelf (COTS) deployment through solutions like NVIDIA EGX, which offers Kubernetes-based container orchestration for streamlined deployment and management.

Available as a downloadable ZIP package or an NVIDIA NGC container image, the A100X-Aerial solution empowers telecom providers and enterprises to build scalable, GPU-accelerated 5G vRAN platforms with greater flexibility and speed. Early access to Aerial is available now.

Enterprise AI on 5G From Core to Edge

The NVIDIA A100X bridges enterprise AI and private 5G in a single converged solution. By integrating with the NVIDIA EGX platform, A100X enables deployment of AI applications on private 5G networks powered by connected devices (e.g., sensors, actuators, and edge servers). A100X-based systems function as “edge data centers in a box,” delivering robust AI inference, training, and software-defined 5G capabilities in one compact footprint.

Organizations can consolidate AI compute and 5G RAN infrastructure into a unified platform, enabling new use cases across manufacturing, retail, automotive, smart cities, and government. The AI-on-5G platform redefines edge intelligence with high availability, security, and scalability, while creating new economic value through digital transformation.

Unparalleled GPU Performance

Based on the NVIDIA Ampere architecture, the A100X delivers industry-leading performance for AI, high-performance computing (HPC), and data analytics. With Multi-Instance GPU (MIG) technology, a single A100 GPU can be securely partitioned into up to seven independent instances. Each MIG instance operates in isolation, allowing shared usage across diverse workloads in both virtualized and bare-metal deployments and ensuring optimal resource utilization and workload isolation.
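To make the seven-instance limit concrete, here is a minimal planning sketch that checks whether a requested mix of MIG instances could fit on one GPU. The profile names and their compute-slice and memory sizes are assumptions based on the profiles commonly documented for an 80 GB A100 and should be verified against the NVIDIA MIG documentation. The check covers only the total compute-slice and memory budgets, so it is a necessary condition rather than a full placement validator.

```python
# Assumed MIG profiles for an 80 GB A100: name -> (compute slices, memory in GB).
# These values are NOT taken from this datasheet; confirm them in the MIG user guide.
PROFILES = {
    "1g.10gb": (1, 10),
    "2g.20gb": (2, 20),
    "3g.40gb": (3, 40),
    "4g.40gb": (4, 40),
    "7g.80gb": (7, 80),
}

MAX_COMPUTE_SLICES = 7   # "up to seven independent instances"
TOTAL_MEMORY_GB = 80     # GPU memory from the specification table


def fits_on_one_gpu(requested: list[str]) -> bool:
    """Return True if the requested profiles stay within the slice and memory budgets.

    Hypothetical helper: real MIG placement has extra rules that this
    budget check does not model.
    """
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= MAX_COMPUTE_SLICES and memory <= TOTAL_MEMORY_GB


if __name__ == "__main__":
    for plan in (["1g.10gb"] * 7, ["4g.40gb", "2g.20gb", "1g.10gb"], ["3g.40gb"] * 3):
        print(plan, "->", "fits" if fits_on_one_gpu(plan) else "does not fit")
```

A scheduler would still need to ask the driver for the placements it actually supports; this sketch only rules out requests that exceed the card's total budget.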

Advanced Networking and Security

The BlueField-2 DPU combines with NVIDIA’s ConnectX SmartNIC family to offer state-of-the-art networking, storage, and security offloading. By handling infrastructure services outside the host CPU, it offloads processing, isolates workloads, and accelerates operations to enable secure, efficient data centers.

Integrated PCIe Switch for Efficiency

A key feature of the A100X is its onboard PCIe Gen4 switch, enabling direct GPU-DPU communication without involving the host PCIe bus. This architecture improves data movement efficiency and minimizes latency, maximizing throughput for data-intensive applications.
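The benefit of keeping GPU-DPU traffic off the host bus can be estimated with simple arithmetic. The sketch below compares the raw bandwidth of the x8 electrical PCIe Gen4 host link with the combined line rate of the two 100 Gbps network ports listed in the specifications. It assumes the standard PCIe Gen4 signaling rate of 16 GT/s per lane with 128b/130b encoding, ignores packet and protocol overhead, and assumes both ports run at line rate at the same time; the function names are illustrative.

```python
PCIE_GEN4_GT_PER_S = 16.0          # transfers per second per lane (GT/s), PCIe Gen4
PCIE_ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie_gen4_gbytes_per_s(lanes: int) -> float:
    """Raw one-direction PCIe Gen4 bandwidth in GB/s for a given lane count."""
    return lanes * PCIE_GEN4_GT_PER_S * PCIE_ENCODING_EFFICIENCY / 8


def network_gbytes_per_s(ports: int, gbps_per_port: float) -> float:
    """Aggregate network line rate in GB/s (bits converted to bytes)."""
    return ports * gbps_per_port / 8


if __name__ == "__main__":
    host_link = pcie_gen4_gbytes_per_s(8)      # x8 electrical link to the host
    network = network_gbytes_per_s(2, 100.0)   # dual 100 Gbps ports
    print(f"Host link (Gen4 x8): {host_link:.2f} GB/s per direction")
    print(f"Dual 100 Gbps ports: {network:.2f} GB/s aggregate")
    print(f"Network exceeds host link: {network > host_link}")
```

With these assumptions the host link tops out near 15.75 GB/s per direction, while the two network ports together can deliver 25 GB/s, which suggests why a direct path between the DPU and GPU avoids a bottleneck at the host.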

Enterprise-Ready Virtualization Support

With MIG, GPU resources can be dynamically allocated and scheduled across multiple users, supporting Kubernetes, containers, and hypervisors. This ensures right-sized GPU allocation with guaranteed Quality of Service (QoS) for every application or container, optimizing infrastructure usage and enabling cloud-native deployments.

Converged Accelerator Benefits

Unified Performance and Security

The A100X converged accelerator combines the NVIDIA A100 GPU with the BlueField-2 DPU on a single dual-slot card. This convergence brings unmatched performance and embedded security, making it well suited to enterprise data centers, edge computing, telecommunications, and network firewall or analytics workloads.

Predictable, Bottleneck-Free Performance

By interconnecting GPU and DPU via an integrated PCIe Gen4 switch, the A100X ensures dedicated, high-bandwidth pathways between compute and network resources. This eliminates PCIe bus contention and enables consistent performance, which is critical for real-time and latency-sensitive applications such as 5G signal processing.

End-to-End Data Protection

BlueField-2 enables inline data encryption and isolation at the network interface level. Sensitive data generated at the edge can be securely transferred without passing through the host system, minimizing exposure to network threats and enhancing overall infrastructure security.

AI-Driven Networking Intelligence

NVIDIA A100X leverages AI to enhance networking functions such as intrusion detection, data leak prevention, and traffic pattern prediction. With BlueField-2 supporting ConnectX-6 Dx functionality, the GPU can directly analyze and respond to network traffic, enabling smarter, real-time network management.

Cost-Effective Deployment

By integrating GPU, DPU, and PCIe switching in a single form factor, the A100X enables high-end performance using standard servers, eliminating the need for specialized or costly infrastructure. Even edge deployments can benefit from accelerated computing without additional complexity.

Multi-GPU Scaling

Third-Generation NVIDIA NVLink Support

The A100X supports NVLink bridges for high-bandwidth GPU-to-GPU communication, enabling data sharing between two A100X cards at up to 600 GB/s bidirectional bandwidth. NVLink bridges are compatible with motherboards supporting standard or wide-slot spacing, allowing for flexible deployment in various chassis configurations.

NVIDIA AI Enterprise & Virtual GPU Software

A100X supports NVIDIA AI Enterprise software and Virtual Compute Server (vCS) solutions for accelerating virtualized AI, HPC, and big data workloads. These tools bring consistent performance and seamless manageability across data centers and cloud-native environments.

Optimized Deep Learning Frameworks

A100X supports leading AI frameworks such as TensorFlow, PyTorch, MXNet, Caffe2, and CNTK, ensuring optimal multi-GPU training performance. NVIDIA software libraries like cuDNN, TensorRT, and cuBLAS maximize throughput and minimize latency for inference and HPC workloads.

CUDA Parallel Computing Platform

Developers can leverage industry-standard programming languages (C/C++, Fortran) and parallel APIs (OpenACC, OpenCL, DirectCompute) using the CUDA platform. This facilitates the acceleration of a wide array of compute-intensive tasks including scientific simulation, ray tracing, and image or video processing.